The AI Pin Isn’t Dead: Humane’s Fall Ignited a Wearable AI Boom

In February 2025, Humane quietly pulled the plug on its much-hyped flagship, the AI Pin. The start-up sold key assets — including its software platform, patents and team — to HP for US $116 million. What once promised to be a screenless smartphone-killer is now considered by many a cautionary tale in the first wave of wearable AI.

Still, the shutdown may mark the concept’s rebirth rather than its end. Around the world, a new generation of wearable-AI devices is quietly being built, and investors, engineers and early adopters are watching.

When Humane launched the AI Pin in April 2024, it introduced a radical idea: what if you didn’t need a screen? What if your AI assistant could live as a small wearable clipped to your shirt — always with you, always listening, always ready — without the distractions of a smartphone?

In theory, it was a dream: gesture controls, voice-driven interaction, an AI co-pilot that could schedule meetings, send messages, summarise emails, take photos or even play music — all without a touchscreen or an app drawer.

But the reality didn’t deliver. Reviewers slammed the device for poor performance: slow responses, limited camera quality, overheating, short battery life and an interface that was often laggy and imprecise.

Pricing was another hurdle: at US $699 (plus a subscription for AI connectivity and services), the AI Pin was as expensive as a premium smartphone — but offered far less utility.

By mid-2024, returns started outpacing sales. Many early adopters — former believers in the wearable-AI dream — decided it wasn’t worth the cost or compromise.

Humane raised $230 million before launch but reportedly sold fewer than 10,000 units. That works out to more than $23,000 of funding per device sold, making the AI Pin one of the most expensive hardware flops on a per-unit basis.

At its core, the AI Pin’s failure underscored a simple truth: smartphones are more than hardware. They are hubs and ecosystems, backed by mature software, rich app stores and entrenched user habits. Displacing that, even with a shiny AI dream, would require flawless execution plus compelling everyday value. Humane didn’t deliver.

The Device May Have Failed, but the Idea Lives On

Humane’s exit didn’t kill the wearable-AI dream. Instead, it created a reset — a reality check. And that’s exactly what the broader ecosystem needed.

Several observations make a case for a “second wave” of wearable AI:

- Technical maturity is rising fast. Academic and industry research, such as the project Synergy: Towards On-Body AI via Tiny AI Accelerator Collaboration on Wearables, shows that on-body AI (running inference directly on wearable hardware rather than relying continuously on cloud servers) is becoming viable. This reduces latency, improves privacy and lowers power consumption.

- Need-driven use-cases are surfacing. Beyond novelty, wearables integrated with AI offer real advantages in domains such as health-monitoring, fatigue detection, wellness tracking, real-time feedback for professions requiring hands-free operation, and ambient assistance (navigation, translation, reminders).

- Consumer readiness for non-screen modes is growing. As people grapple with screen fatigue, digital overload and privacy concerns, there’s fresh appeal in subtle, always-available assistants — especially if they can function without tethering to a phone or bogging you down with app-dependency.

- Resilience of the concept. The collapse of AI Pin acts less like a full stop and more like a “pause to recalibrate.” The technical aspiration — wearable AI that augments, not distracts — remains intact; what changes is delivery, price, design, and value proposition.

Meta reportedly sold 1 million+ units of Ray-Ban smart glasses within their first year — far outpacing expectations for any emerging wearable.

A fresh line-up of devices, from smart glasses to AI pendants, suggests that the wearable-AI dream has faded rather than died.

As of late 2025, several companies are stepping up with wearables that learn from Humane’s missteps — devices built not for hype, but for real-world use.

One of the most prominent examples is Meta’s newly launched Meta Ray-Ban Display, a pair of smart glasses with a built-in display in the right lens, plus a 12 MP camera, open-ear audio and AI features for live captions, translations, notifications and context-aware assistance. The Ray-Ban Display marks Meta’s first attempt at giving its smart glasses a true heads-up interface.

Alongside it, Meta’s sport-focused Oakley Meta Vanguard, aimed at athletes and active users, pairs AI-powered cameras, environmental sensors and long battery life to support on-the-go video, fitness tracking and real-time AI assistance during workouts.

On a different front, researchers and companies working on biosignal and edge-AI wearables are making steady progress. For instance, a recently published research platform called BioGAP-Ultra integrates multiple physiological sensors (EEG, ECG, PPG and more) with embedded AI processing, enabling real-time health monitoring, stress detection or fatigue tracking without relying on a smartphone or cloud connection.

These aren’t buzz-first gadgets. They reflect lessons learned — better hardware design, real-world utility, clear value, and a recognition that wearable AI must offer more than novelty.

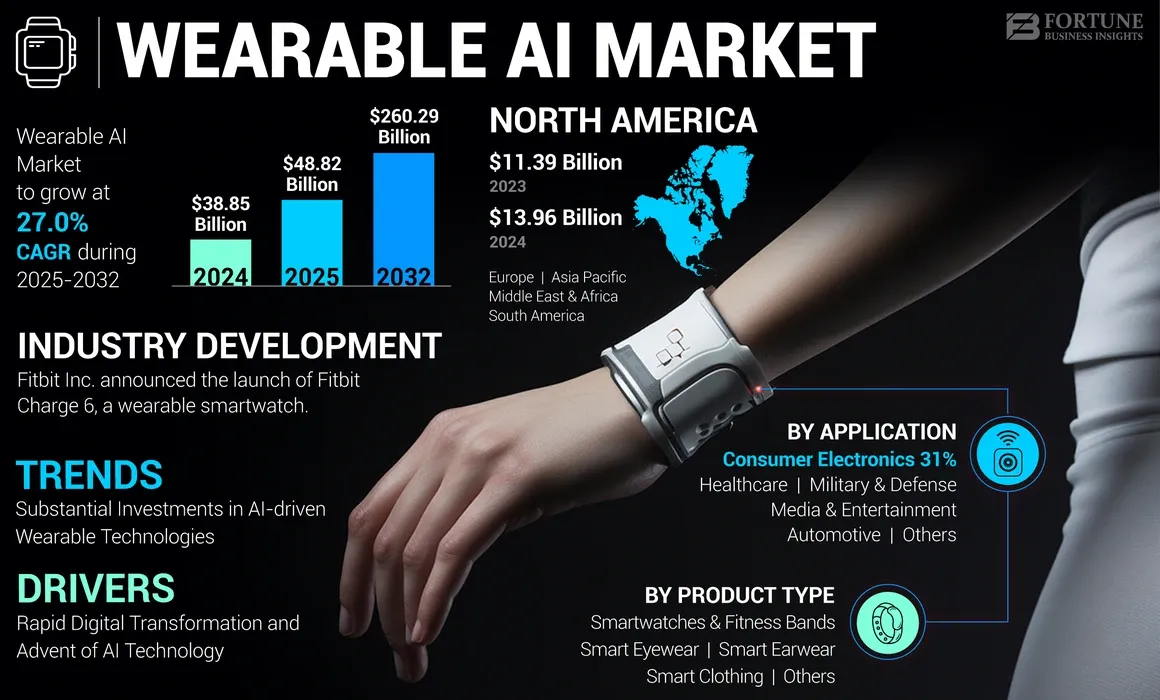

The global wearable-AI market is projected to reach $150–170 billion by 2030, growing at 25–30% CAGR, driven by health and smart-assistant devices.

What Changed? What Makes the New Wave More Promising?

There are several underlying shifts that help explain why this new generation might succeed where the AI Pin failed.

First: on-device intelligence and optimized hardware. With advances like BioGAP-Ultra’s low-power multi-sensor processing and newer gesture/vision systems for wearables, devices can now run AI tasks locally, reducing latency, preserving privacy, and extending battery life. What once required a powerful phone or cloud server can increasingly happen inside the wearable itself.

Second: diverse, practical use-cases beyond gimmick. The new wearables target real, everyday needs — from fitness and health tracking (stress detection, fatigue monitoring) to hands-free productivity (notifications, navigation, translation) and ambient assistance (voice AI, real-time data overlay). For example, the Ray-Ban Display isn’t just a camera — it’s a contextual assistant, aiming to reduce friction in how we consume information on the go.

Third: integration with existing ecosystems. Unlike standalone experiments, many of the new devices are built by companies with established hardware or software ecosystems — Meta with its AI and social platforms; biosensor wearables aligning with health-data apps; smart glasses bridging fashion and functionality. That makes them more likely to survive beyond early adopters.

Finally: the mental model for wearables has matured. Early hype promised a “phone replacement,” but today’s wave seems humble — part-screen, part-assistant, part-sensor — more of a complementary device than a replacement. The focus is shifting away from “replace your smartphone” to “enhance your life.”

A 2024 Deloitte survey found that 41% of consumers are willing to use an AI-enabled wearable if it reduces “screen time dependency.”

The collapse of the AI Pin left a big question mark. But ironically, it may have done the industry a favour by reminding engineers, investors and consumers what works.

Instead of letting hype lead design, companies are now asking tougher questions: What real value does this wearable bring? How does it integrate with people’s lives, not just their wardrobes? Can it survive weeks of real-world use — battery cycles, variable lighting, noisy environments?

The outcome? Arguably smarter bets, better hardware and clearer positioning. The AI Pin may have failed as a mass-market device, but its legacy lives on in these new wearables, reframed for utility rather than novelty.

If this second wave succeeds, wearable AI could usher in a quieter but deeper tech revolution.

We may see a world where glasses, pendants or biosensor wearables become everyday companions: giving you context-aware assistance, discreet health tracking, and seamless interaction with your digital life — without ever pulling out a phone.

For health and wellness, devices like BioGAP-Ultra–powered wearables could offer early warning of fatigue, stress or sleep issues. For professionals and creators — or even digital nomads — smart glasses or wrist-worn AI assistants could replace pockets full of gadgets with a single subtle wearable.

And for society at large, this could signal a shift: from screen-driven digital overload toward ambient, assistant-driven living. A world where AI doesn’t demand your attention, but supports your life.

If 2024–25 was the season of experimentation, with some wild misses, then 2026 may mark the start of wearable AI’s quiet, steady ascent.

Still, beneath the excitement lies a fragile foundation and several risks that could stall wearable AI before it matures.

The biggest is privacy. A device that sees what you see, hears what you hear and measures how your body reacts to the world is not a gadget; it is an intimate witness. Glasses with AI cameras could easily be perceived as surveillance tools. Bio-sensing wearables could collect stress signals in environments where psychological privacy matters. If companies don’t set strong data boundaries early, public pushback could mirror the backlash Google Glass faced more than a decade ago, only louder.

There’s also the battery-and-heat problem. The laws of thermodynamics haven’t changed: squeezing high-performance AI into tiny frames generates heat. If wearables constantly throttle themselves to avoid overheating, the “AI assistant on your body” dream becomes just as sluggish as the AI Pin was in its early reviews.

And then comes social acceptance. Wearables live on your face, chest or wrist, not in your pocket. The wrong design can become the next Segway or Google Glass: ahead of its time, but awkward in public. And if these devices routinely misunderstand commands, flash unwanted notifications or accidentally record conversations, the social stigma could be swift.

Most critically: platform fatigue is real. Consumers have already lived through VR hype cycles, crypto winters and AI-app boom-and-bust stories. If wearable AI overpromises one more time, the whole category could be dismissed long before it reaches its maturity curve.

In the end, wearable AI will succeed or fail not on technology alone but on restraint: how well companies resist overreaching ambition while building something truly helpful.

Humane tried to leapfrog the smartphone era in one bold jump — and fell into every trap a first mover could. But its failure wasn’t a dead end. It was a correction. Other companies studied the wreckage and built differently: slower, quieter, more grounded in reality.

Now the second wave of wearable AI is taking shape. Not as a flashy revolution, but as a gradual shift in how we compute.

If it works, we may soon live in a world where:

- we don’t reach for our phones for every small task

- we don’t drown in notifications

- we don’t live glued to glowing rectangles

- and yet we remain more assisted, not less, by the technology around us.

The AI Pin didn’t deliver on that vision.

But it sparked the movement that might.

The next chapter of wearable AI won’t be written by the boldest company — but by the one that builds the quietest, most useful tool.

A device that doesn’t scream “future,” but simply disappears into your life.

If that happens, Humane’s collapse won’t be remembered as the failure of wearable AI. It will be remembered as the moment the category grew up.
