Multimodal AI: Teaching Machines to See, Hear, and Understand Together

What if an AI could watch a video, read a transcript, and then explain it to you in plain English?

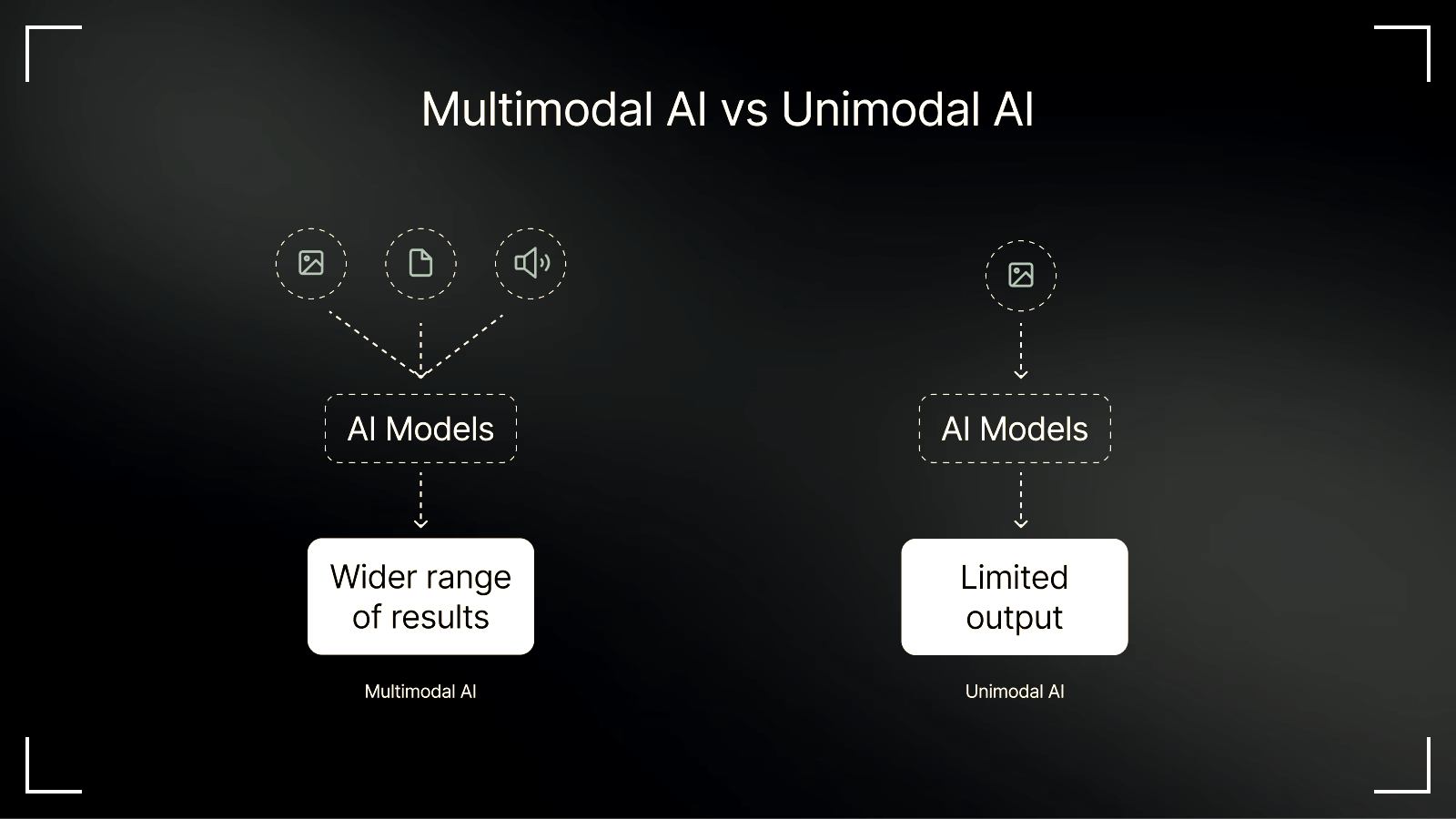

That’s exactly the promise of multimodal AI — models that don’t just work with text or images alone, but can understand and connect across text, images, video, and even audio.

For years, AI felt a bit one-dimensional. A text model could chat with you, but it couldn’t “see.” An image model could create a painting, but it couldn’t “explain” it. But in reality, the world isn’t split into neat boxes. A news report, a medical scan, a lecture video — they all mix text, visuals, and sound together. And that’s where multimodal AI comes in.

Take healthcare, for example. Imagine a doctor uploading an X-ray scan along with a patient’s medical notes. Traditionally, these would be looked at separately — a radiologist would study the scan, while another doctor would review the patient’s history, symptoms, and lab results. This takes time, and sometimes important connections are missed. With multimodal AI, the process changes completely. The model doesn’t just analyze the X-ray; it also reads the patient’s notes, picks up on keywords like “chronic cough” or “family history of lung issues,” and cross-references them with what it sees in the scan. It can even bring in external medical literature to check if similar patterns were reported elsewhere.

The result? The AI could flag an early sign of pneumonia or even a potential tumor that might otherwise take days (and multiple specialists) to identify. This means faster diagnosis, reduced errors, and potentially life-saving decisions made in real time.

Education is another sector where the impact could be significant.

Imagine a teacher recording a one-hour lecture on, say, photosynthesis. Traditionally, the teacher would then spend hours preparing supporting material: typing out notes, creating quizzes, designing revision slides, and maybe even editing clips for students who missed class. It’s a huge workload.

Now picture multimodal AI stepping in. The model doesn’t just transcribe the teacher’s words into text — it also understands the diagrams on the slides, picks up on the emphasis in the teacher’s tone (like when they stress “chlorophyll is essential”), and recognizes how the lecture is structured from introduction to conclusion. From this, the AI automatically generates a clean summary sheet for students, creates a set of quiz questions tailored to the content, and even slices the lecture video into bite-sized clips for quick revision — say, a 2-minute explainer on “light-dependent reactions.” What once took hours of manual effort can now be done in minutes.

Teachers will save time, students will get personalized learning materials instantly, and education as a whole will become more interactive and efficient.

Even in business, the impact is clear. Picture this: a company feeds in a Zoom call recording, the slide deck used in the meeting, and the latest financial report. A multimodal AI can merge all of it — identifying action items, risks, and opportunities. For professionals buried in information overload, that’s like having an assistant who never forgets and sees the full picture.

If these examples have caught your interest, here is how the technology behind them works.

In simple terms, multimodal AI works by turning every kind of data (words, images, sounds, video frames) into numbers. Think of these numbers as a kind of “digital fingerprint” of the data. The technical term is embeddings: lists of numbers (vectors) in which similar content ends up with similar values.

For example:

- A sentence like “The cat is on the mat” becomes a set of numbers that represent its meaning.

- An image of a cat also gets turned into a set of numbers capturing its features — whiskers, ears, fur color.

- Even a short audio clip of someone saying “cat” is converted into numbers that represent the sound pattern.
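To make “a sentence becomes a set of numbers” concrete, here is a minimal sketch. It uses feature hashing, a deliberately crude stand-in that I am assuming purely for illustration: real multimodal models learn their embeddings with neural networks, and this toy version captures word identity, not meaning. The function name `toy_text_embedding` and the vector size of 8 are hypothetical choices.

```python
import math
import zlib


def toy_text_embedding(text: str, dim: int = 8) -> list[float]:
    """Map a sentence to a fixed-length vector by hashing each word into a bucket.

    This is feature hashing, NOT a learned embedding: it only shows that
    arbitrary text can be turned into a fixed-size list of numbers.
    """
    vec = [0.0] * dim
    for word in text.lower().split():
        # crc32 is deterministic across runs, unlike Python's built-in hash()
        bucket = zlib.crc32(word.encode("utf-8")) % dim
        vec[bucket] += 1.0
    # Scale to unit length so long and short sentences are comparable
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm else vec


sentence_vec = toy_text_embedding("The cat is on the mat")
print(len(sentence_vec))  # always `dim` numbers, whatever the sentence length
print(sentence_vec)
```

The point to take away is the shape of the output: any sentence, long or short, comes out as the same number of values, which is what lets a model compare very different inputs later on.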

Once all these different data types are translated into numbers, the AI is trained to place them in a shared space, almost like putting everyone in the same room and teaching them the same language. Here, the AI learns how the different modalities connect: the word “cat”, the picture of a cat, and the sound of a cat meowing all map closely together.
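“Map closely together” is usually measured with cosine similarity, which scores how closely two vectors point in the same direction. The sketch below shows the idea with hand-written, three-number vectors. These values are made up for illustration and are not the output of any real model; the labels in `shared_space` are hypothetical too.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Made-up embeddings: three "cat" items from different modalities, one unrelated item
shared_space = {
    "text_cat": [0.9, 0.1, 0.0],     # the word "cat"
    "image_cat": [0.8, 0.2, 0.1],    # a photo of a cat
    "audio_meow": [0.85, 0.05, 0.2],  # a recording of meowing
    "text_car": [0.1, 0.9, 0.3],     # the word "car"
}

anchor = shared_space["text_cat"]
for label, vec in shared_space.items():
    print(f"{label:12s} similarity to text_cat: {cosine_similarity(anchor, vec):.2f}")
```

In this toy setup the three cat items score close to 1.0 against each other, while the car scores much lower. A trained multimodal model aims for the same pattern, only with hundreds or thousands of dimensions instead of three.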

This is why multimodal AI can do things like:

- Look at an image and describe it in words (“A brown cat sitting on a mat”).

- Watch a video and answer questions about it (“What color is the car at the end of the clip?”).

- Listen to an audio file and match it to the right transcript.
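The last task in that list, matching an audio file to the right transcript, comes down to a nearest-neighbour search in the shared space. Here is a minimal sketch, assuming the audio clip and each candidate transcript have already been embedded. All vectors are invented for the example, and `best_match` is a hypothetical helper name.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(query_vec, candidates):
    """Return the candidate key whose vector is most similar to the query."""
    return max(candidates, key=lambda name: cosine_similarity(query_vec, candidates[name]))


# Made-up transcript embeddings (a real system would get these from a trained model)
transcript_vecs = {
    "A brown cat sitting on a mat": [0.9, 0.1, 0.1],
    "A red car driving away": [0.1, 0.9, 0.2],
    "A lecture on photosynthesis": [0.1, 0.2, 0.9],
}

# Made-up embedding of an audio clip recorded during the lecture
audio_clip_vec = [0.2, 0.1, 0.8]

print(best_match(audio_clip_vec, transcript_vecs))  # prints "A lecture on photosynthesis"
```

Image captioning and video question answering involve more machinery than this, but the underlying move is the same: compare numbers from one modality with numbers from another.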

Behind the scenes, it’s all numbers — but because the AI has learned how those numbers from text, images, video, and audio relate, it can “connect the dots” across formats in ways traditional models never could.

As multimodal AI has become more capable and continues to prove its worth, major tech companies are not just investing funds. They are expanding teams, building new infrastructure, and making hires that show they want to lead.

OpenAI, for example, has been hiring for multiple roles focused entirely on multimodal tech: there’s a Technical Lead for Multimodal Infrastructure and engineering roles for “Inference – Multi-Modal” that ensure models handling images, audio, and video can run smoothly at scale. They’re also hiring research scientists whose job is to improve how the AI reasons across modalities: handling video, speech-to-speech interaction, and spatial and temporal relationships, and building models that understand changes over time.

Google is doing the same. The Gemini family (Ultra, Pro, Nano) is one of its biggest bets in years: models built from the ground up to process text, images, code, and more, natively. Google has also said that Gemini 2.0 runs on its own custom hardware, the Trillium generation of TPUs, showing that it is building both software and infrastructure for scale.

These moves aren’t minor. OpenAI has reportedly recruited senior engineers from DeepMind who specialize in multimodal development. And with the cost of compute and memory rising, it has been negotiating with chip manufacturers such as Samsung and SK Hynix to secure hardware partnerships that can handle the load.

That is scary and interesting at the same time. You can never predict what these companies will come up with next. Their tools might surprise you, take over parts of your job, or make you far more efficient, and if you use them creatively, you might even end up a millionaire!

If all of that is clear, we will stop here, in the hope that an AI tool will soon use this article to explain multimodal AI to other tech enthusiasts.